They Wear Our Faces: How Scammers Are Using AI to Swindle American Families

This October, Bitdefender launched They Wear Our Faces, a national awareness campaign exposing how scammers use AI to deceive victims and impersonate the people they trust, exploiting existing relationships.

The campaign highlights a growing crisis in the United States, where deepfake technology and personalized scams are rapidly becoming mainstream criminal tools. By dramatizing how bad actors can clone voices, mimic faces, and impersonate loved ones, They Wear Our Faces challenges the stigma that victims are to blame and opens a conversation about what protection really means in an age of AI-driven fraud.

Why This Matters

The United States has become the world’s primary hunting ground for scammers. The Federal Trade Commission (FTC) reports that Americans alone lost more than $12.5 billion to fraud in 2024, according to reported losses, a sharp increase over previous years.

Unlike in most other countries, scammers in the US use every possible channel: email, SMS, social media, and even seemingly legitimate digital ads. For American consumers, distinguishing between genuine offers and fraudulent pitches has never been more challenging.

What Bitdefender Telemetry Reveals

Bitdefender data confirms this. Between March and September 2025, the United States received nearly 37% of global spam, making it the world’s primary target, and 45% of the spam Americans received was fraudulent or malicious. The leading threats flagged by the Bitdefender Antispam Lab were scams such as account and financial phishing, advance-fee fraud, extortion attempts, and even dating scams. The most impersonated brands reflect the everyday services Americans rely on: Microsoft (18%), Costco (14%), Amazon (12%), American Express (10%), and DocuSign (10%) were among the top lures used to trick users into clicking malicious links or sharing sensitive data.

SMS Scams: A Growing Concern for Consumers in the United States

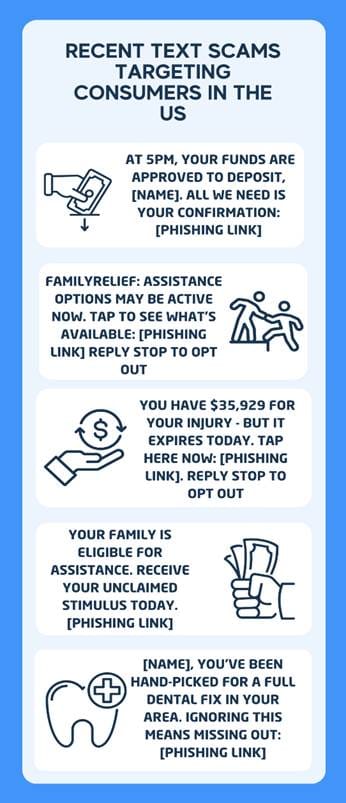

SMS scams are also on the rise. Bitdefender telemetry shows that 10% of US users received at least one SMS scam in the past two months.

What makes SMS scams in the United States particularly dangerous is their level of personalization and sophistication. While scams in Europe or Canada often rely on obvious brand impersonation and straightforward attempts to steal card details, US campaigns frequently mimic aggressive but legitimate marketing tactics so convincingly that even cautious users may struggle to tell the difference. Scammers use randomized domains, urgent calls to action, and even the recipient’s name to capture attention, blurring the line between a real promotion and attempted fraud. This realism makes SMS scams targeting American consumers uniquely deceptive and harder to detect. The top topics flagged between August and September 2025 include:

- Finance (26%)

- Government (14%)

- Entertainment (11%)

- Healthcare (9%)

- Insurance (5%)

- Delivery (3%)

- Prize notifications (3%)

Examples include urgent notifications like:

By imitating official programs, financial institutions, or urgent prize alerts, these SMS scams blur the line between legitimate marketing and criminal manipulation, making them particularly hard to spot.

Beyond Email and SMS: Scam Campaigns Supercharged by AI

Bitdefender Labs has also tracked US-focused scam and malware campaigns that leverage AI and social engineering. Our investigations have uncovered:

- Malvertising campaigns on Meta that distributed advanced crypto-stealing malware to Android users.

- Weaponized Facebook ads exploiting cryptocurrency brands in multi-stage malware campaigns.

- Sophisticated LinkedIn recruiting scams run by the Lazarus Group.

- Fake Facebook ad campaigns impersonating Bitwarden, combining brand abuse with malware delivery.

How AI Has Changed the Scam Business

Artificial intelligence has fundamentally reshaped the economics of scamming. What once required teams of fraudsters and weeks of preparation can now be executed in minutes with freely available tools. In short, AI makes scams faster, cheaper, and more convincing.

Recent incidents highlight just how dangerous AI-fueled scams have become:

- The FBI recently warned that scammers impersonated US officials using deepfake technology in coordinated scam campaigns.

- Threat actors have also used AI-generated medical experts in deepfake videos to push bogus treatments and healthcare scams, as reported by Bitdefender Labs.

- In Hong Kong, criminals used a deepfake video call impersonating a company’s CFO to trick an employee into transferring more than $25 million to their accounts.

Bitdefender research has documented similar abuses, including deepfake videos of Donald Trump and Elon Musk in looped “podcast” formats, often promoting bogus crypto investments. Scammers also recycle old “giveaway” schemes, tricking victims into sending cryptocurrency or handing over personal data in exchange for fake rewards.

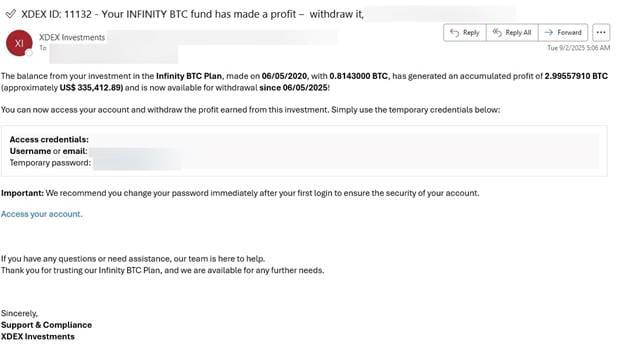

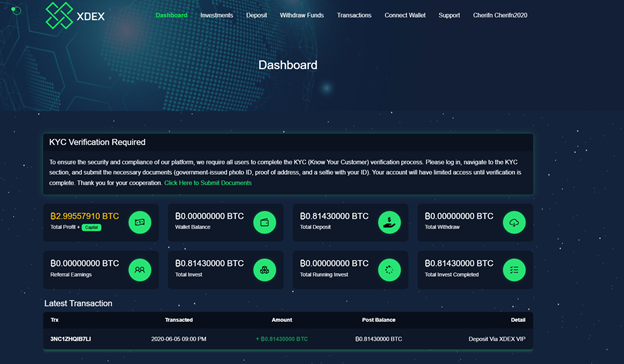

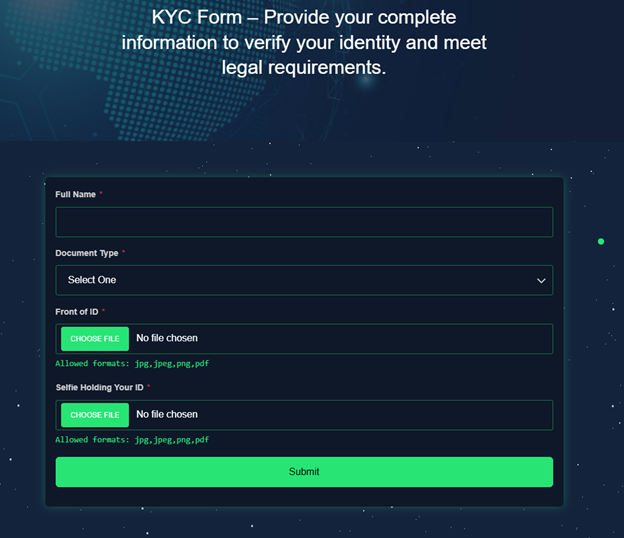

A recent case investigated by Bitdefender illustrates this risk: victims received an email from a fake investment service, XDEX Investments, claiming they had earned over 2.99 BTC in profits from an old “Infinity BTC Plan.” The fraudulent site prompted users to log in with temporary credentials and then to complete a KYC (Know Your Customer) form that demanded not only personal details but also a selfie of them holding their government-issued ID.

This tactic is especially dangerous in the context of AI-enabled scams and deepfake impersonations. Once criminals obtain such biometric data — a high-quality photo of a face alongside official documents — it can be used to fuel impersonation scams, generate deepfakes, or bypass facial recognition systems. Essentially, scammers are tricking victims into providing the perfect raw material for the next generation of AI-driven fraud.

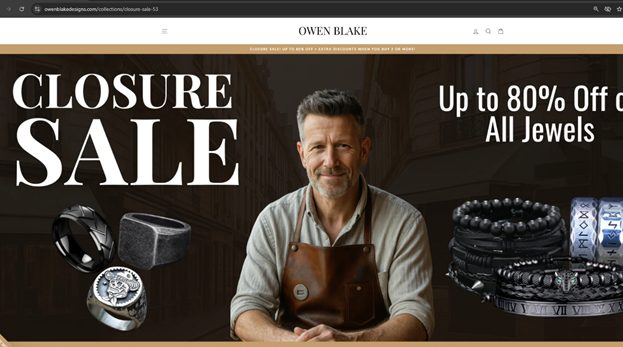

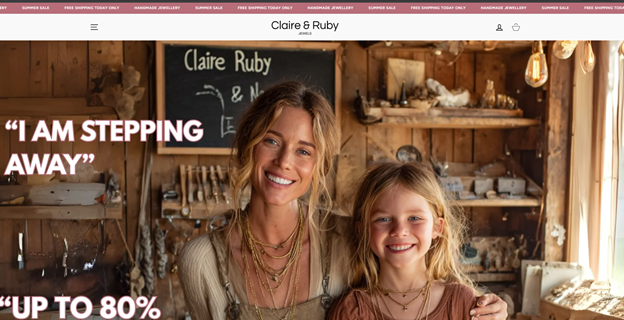

At the same time, other AI-powered scam trends continue to emerge. In the US, Bitdefender has flagged a campaign that pairs AI-generated photos with emotional ad copy to promote fake “closure sales,” where fraudulent websites manufacture urgency around disappearing deals. With AI, even non-English-speaking cybercriminals can effortlessly generate polished English content, making their scams more convincing than ever.

Here’s an example of how easy it is to generate a deepfake video starting from just one screenshot: